Shannon · archive · 5 min read

Aftermath of a Bad Chip: Part 1

The first half of a tale of hardware sadness

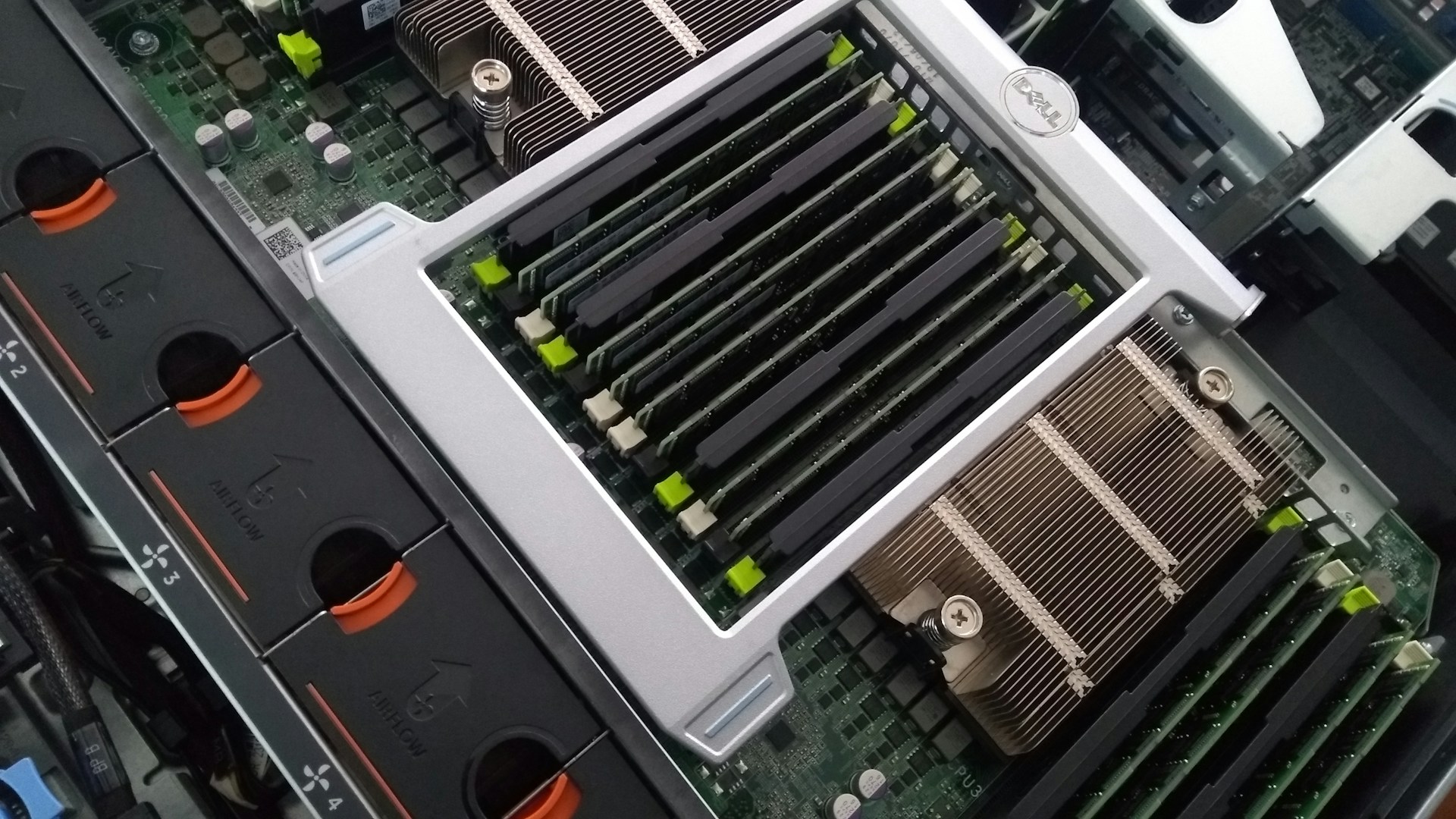

As I write this blog entry, I’m sitting here waiting for Srv3 to come back online after having its RAM replaced during this afternoon’s emergency maintenance.

Over the past couple of weeks, Srv3 has gone offline a number of times. It kept responding to pings but appeared swamped and unresponsive to web, database, and email connections, and to any kind of access at the console. At first we attributed these outages to everything from DDoS attacks and excessive client resource usage to a faulty motherboard. Every crash seemed to happen while the machine was under a heavier than normal workload for one of these reasons. We would see the crash, recover the system, check how much load it had been under, dig into the workload and logs, find some suspicious traffic or a script running out of control, and conclude that was the cause. After all, it wouldn’t be the first time a DDoS attack or a runaway client program had taken one of our systems offline. We’ve gotten pretty good at making our servers resilient under normal circumstances, but occasionally we still see one brought to its knees this way. (It’s rare, but it happens. When it does, we learn from the experience and re-tool our protections so the same thing can’t happen a second time.)

However, when the server went offline in a similar fashion around 6pm last night, we rebooted it and found a new, previously unseen symptom: only half of the system’s RAM was recognized and usable. We had to reboot the server a second time later that night to get all of the RAM back online. This led us to question the stability of the RAM modules in the machine. Even if a chip is not dead but is acting “flaky”, it can run fine for days or weeks and then fail under load, causing problems for the entire server.

In light of this new, possibly connected symptom (and since we’re not huge believers in rare coincidences, especially when it comes to technical matters), we ran hardware tests on Srv3 last night. They revealed that one of the two memory modules in the server was “questionable”: while the memory was not failing completely, it was generating errors under heavy read/write operations, more than we are comfortable seeing on any machine in production use. Srv3 does use ECC memory, which is designed to “catch and correct” errors when reading from and writing to the chips. But if a chip is encountering errors constantly under heavy load, that could very well explain why the machine was becoming unresponsive under heavy load.

The admin team now believes that these periods of abnormally high workload and stress were the “trigger” for the crashes rather than their root cause: the high workload caused the memory errors to surface, and those errors compounded the load problem until the server simply crashed.

Early this morning we scheduled an emergency maintenance window with the datacenter for late this afternoon (4pm to 6pm CDT) so they could take the server offline and replace the RAM modules. Normally we would schedule these windows during off-peak hours, but given the nature of the situation, and the fact that in today’s 24x7x365 global web environment there’s really no “off-peak” time for our clients or for us, we wanted the memory replaced as soon as possible. We’re hoping that once the RAM modules are replaced, things can go back to the normal, quiet everyday routine for Srv3 and the clients housed on it.

A few moments ago I received word that Srv3 was back up and running with the new RAM modules, and so far preliminary testing shows no errors with the new modules. Hopefully this means our little period of strangeness is over and things can get back to normal around these parts.

So that’s where we stand from a technical standpoint. We move forward and keep an eye out for any more strangeness. (I personally won’t rest easy for at least two weeks. Every time I sit down at a console I’ll do a quick “Is Srv3 up and okay?” check. I know the monitoring system should alert us quickly if it’s not, but after the strange way things have gone over the last few weeks, I’ll simply feel better checking for myself.) From an overall business standpoint, and given what we’ve learned, we obviously have some work ahead of us and some new goals to set for ourselves.

Those topics, which are what I really wanted to discuss here, will, I fear, have to wait for a follow-up blog post. This entry has already grown far more long-winded than the quick note I originally planned, and there’s quite a bit more to discuss. I’m going to check Srv3 one more time and then brave the after-rush-hour traffic home. Hopefully a few hours of mindless relaxation (away from an SSH session) will give me some perspective and time to collect my thoughts on the rest of the issues. Tomorrow I’ll sit down and put together a clearer outline of exactly what happened, what went wrong, and how we’re going to fix it, learn from it, and move forward from here.

2024 Edit - Part Two continues our tale